DeepHLS V1.0: A complete toolchain for automatic synthesis of deep neural networks to FPGA

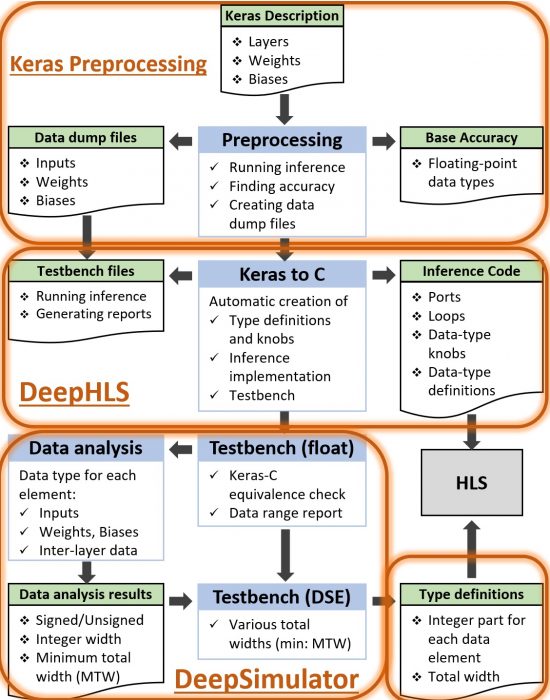

DeepHLS is a fully automated toolchain that creates a C-level implementation of a deep neural network (DNN) that is synthesizable with HLS tools. Its stages include Keras to C, validation, quantization analysis, and quantization application. Thanks to its scalability features, it supports very large DNNs such as VGG.

Preprocessing: Before the C implementation is created, the required data must be extracted from the DNN being processed. Preprocessing receives a trained DNN, which contains the layers’ specifications, weights, and biases.

Keras to C: This stage is the main part of creating the C implementation. In this stage, the Keras [2, 3] implementation is processed to extract all the required information.

In creating the C implementation, several measures are taken to enhance the usability and performance of the circuit generated from it:

- The code is written so that it synthesizes well and achieves high performance on an FPGA.

- The generated C code can use various data types for each of the data elements, such as inputs, outputs, weights, biases, and layer data. They may all have the same data type, either floating-point (FlP) or fixed-point (FxP), with arbitrary precision. It is also possible to have different FxP configurations (binary point position) for each data element.

- The code created for inference not only performs the main math operations but also includes features that let the testbench monitor and save all the data elements while executing it.

- For layer data, either internal FPGA storage (such as BRAM) or external memory (such as DDR) can be used, which makes it possible to implement even very large networks with many layers or large layer dimensions.

- Input data sets, especially when many of them are to be applied, can be very large. Therefore, to ensure scalability, File-to-Memory mechanisms are included in the testbench.

- The inference code to be synthesized is fully flat (without using functions) and in a single file. This is important because, first, function calls add extra states and latency when synthesized and, second, a flat implementation allows the HLS tool to make inter-layer optimizations.

- All for loops have labels. This leads to more readable HLS reports. Additionally, it allows designers or tool developers to use external HLS directive files, e.g., a directives.tcl file for Xilinx Vivado HLS.
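The measures above can be illustrated with a minimal sketch of what flat, labeled inference code might look like. It is not output of DeepHLS: the function name `inference`, the type names (`input_t`, `weight_t`, `bias_t`, `layer_t`), the layer sizes, and the `monitor_hidden` buffer are all hypothetical. The `__SYNTHESIS__` macro is defined by Vivado HLS during synthesis; using it to exclude monitoring code is an assumption about how such a feature could be kept out of the circuit.

```c
#define IN_SIZE  4
#define HID_SIZE 3
#define OUT_SIZE 2

/* Hypothetical per-element data types. Replacing a typedef (for example
   with an HLS fixed-point type) changes the type of that element only. */
typedef float input_t;
typedef float weight_t;
typedef float bias_t;
typedef float layer_t;

#ifndef __SYNTHESIS__
/* Testbench-only monitoring buffer: keeps the hidden layer values so the
   testbench can inspect or save them after a run. */
layer_t monitor_hidden[HID_SIZE];
#endif

/* Flat inference: two dense layers written inline, with no helper
   functions. Every loop carries a label so that HLS reports and
   directive files can refer to it by name. */
void inference(const input_t in[IN_SIZE],
               weight_t w1[HID_SIZE][IN_SIZE], const bias_t b1[HID_SIZE],
               weight_t w2[OUT_SIZE][HID_SIZE], const bias_t b2[OUT_SIZE],
               layer_t out[OUT_SIZE])
{
    layer_t hidden[HID_SIZE];

    dense1_out: for (int i = 0; i < HID_SIZE; i++) {
        layer_t acc = b1[i];
        dense1_in: for (int j = 0; j < IN_SIZE; j++) {
            acc += w1[i][j] * in[j];
        }
        hidden[i] = acc > 0 ? acc : 0;   /* ReLU activation */
#ifndef __SYNTHESIS__
        monitor_hidden[i] = hidden[i];
#endif
    }

    dense2_out: for (int i = 0; i < OUT_SIZE; i++) {
        layer_t acc = b2[i];
        dense2_in: for (int j = 0; j < HID_SIZE; j++) {
            acc += w2[i][j] * hidden[j];
        }
        out[i] = acc;
    }
}
```

With labels in place, a Vivado HLS directive file can address a loop as `function/label`, for instance `set_directive_pipeline "inference/dense1_in"`, without touching the C source.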

Running testbench in floating point mode: In this stage, the testbench is executed using the FlP mode for all network data elements. There are two main objectives for this execution.

- When a Keras description of a DNN is executed on a CPU or a GPU, FlP is used. Therefore, if the inference in C is executed using the same input data and the same data type, it should reach the same accuracy as the base accuracy obtained in the preprocessing stage.

- Running the inference code in FlP mode provides the information needed later to assess the effects of FxP mode on accuracy.

Data Analysis: At this stage, the data extracted and stored while running the testbench in FlP mode are analyzed to select appropriate data types.
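The source does not spell out how the stored data are analyzed. One plausible approach, sketched here purely as an assumption, is to take the observed minimum and maximum of a data element and derive its signedness and the number of integer bits needed to hold that range. The names `value_range`, `observed_range`, and `integer_bits` are hypothetical, and the sign bit is counted as part of the integer portion, as in the Xilinx `ap_fixed` convention.

```c
#include <assert.h>
#include <stddef.h>

/* Observed value range of one data element (e.g. one layer's outputs). */
typedef struct {
    double min;
    double max;
} value_range;

/* Scan values recorded during the floating-point run. */
value_range observed_range(const float *data, size_t n)
{
    assert(n > 0);
    value_range r = { data[0], data[0] };
    for (size_t k = 1; k < n; k++) {
        if (data[k] < r.min) r.min = data[k];
        if (data[k] > r.max) r.max = data[k];
    }
    return r;
}

/* Smallest number of integer bits that holds the range without overflow.
   Assumption: a signed type spends one integer bit on the sign. */
int integer_bits(value_range r, int *is_signed)
{
    int bits = 0;         /* magnitude bits */
    double limit = 1.0;   /* 2^bits */

    *is_signed = (r.min < 0.0);
    /* Grow until max < 2^bits and min >= -2^bits. */
    while (r.max >= limit || -r.min > limit) {
        limit *= 2.0;
        bits++;
    }
    return bits + (*is_signed ? 1 : 0);
}
```

For values between -3.2 and 5.7, this yields a signed type with 4 integer bits, covering the interval from -8 up to (but not including) 8.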

One of three modes can be selected for the implementation and execution of the inference.

- Floating-point mode: In this mode, for all data elements in the DNN, the FlP data type is chosen.

- Single Fixed-point mode: An FxP data type configuration is determined by three main characteristics: number of bits in its integer portion (I), number of bits in its fractional portion (F), and being signed or unsigned (S). In this mode, all data types have the same FxP configuration.

- Multiple Fixed-point mode: In this mode, an FxP data type is used for all data elements, with the difference that each of the FxP types can have a different I and S. Note that the whole width (W) is equal for all types.
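To make the I, F, and S characteristics concrete, the following sketch emulates an FxP configuration in plain C by rounding a value to the nearest representable number. The names `fxp_cfg` and `fxp_quantize` are hypothetical. Round-to-nearest and saturation on overflow are assumptions made for illustration; the rounding and overflow behavior of the actual HLS fixed-point type may differ. The sign bit is assumed to be counted in I.

```c
#include <assert.h>

/* One FxP configuration: integer bits, fractional bits, signedness. */
typedef struct {
    int I;
    int F;
    int is_signed;
} fxp_cfg;

/* Return the representable value closest to x under configuration c. */
double fxp_quantize(double x, fxp_cfg c)
{
    int w = c.I + c.F;                 /* whole width W */
    assert(c.F >= 0 && w >= 1 && w <= 32);

    double scale = 1.0;                /* 2^F */
    for (int k = 0; k < c.F; k++) scale *= 2.0;

    /* Range of the underlying W-bit integer. */
    long long hi = c.is_signed ? (1LL << (w - 1)) - 1 : (1LL << w) - 1;
    long long lo = c.is_signed ? -(1LL << (w - 1)) : 0;

    double v = x * scale;
    long long q;
    if (v > (double)hi)      q = hi;   /* saturate high */
    else if (v < (double)lo) q = lo;   /* saturate low  */
    else q = (long long)(v >= 0.0 ? v + 0.5 : v - 0.5);  /* round to nearest */

    return (double)q / scale;
}
```

In Single Fixed-point mode, every data element would share one `fxp_cfg`. In Multiple Fixed-point mode, W stays fixed while I and S vary, so with W = 16 the weights might use `{2, 14, 1}` and the layer data `{6, 10, 1}`.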

Running testbench for various data widths: To determine the whole width (and, as a result, the width of the fractional part of each data type), different widths must be examined to evaluate their impact on the network accuracy.
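The width search can be sketched as a simple sweep. DeepHLS evaluates network accuracy for each width; the sketch below substitutes the maximum quantization error over a set of sample values as a stand-in metric, which is an assumption made to keep the example self-contained. The function names and the signed, round-to-nearest, saturating quantizer are hypothetical as well.

```c
#include <stddef.h>

/* Round x to a signed fixed-point grid with w total bits, i of them
   integer bits (sign included), saturating on overflow.
   Assumes 1 <= i <= w <= 32. */
static double quantize_signed(double x, int w, int i)
{
    double scale = 1.0;                       /* 2^(w - i) */
    for (int k = 0; k < w - i; k++) scale *= 2.0;

    double hi = (double)((1LL << (w - 1)) - 1);
    double lo = -(double)(1LL << (w - 1));
    double v = x * scale;
    if (v > hi) v = hi;
    if (v < lo) v = lo;

    long long q = (long long)(v >= 0.0 ? v + 0.5 : v - 0.5);
    return (double)q / scale;
}

/* Largest absolute quantization error over the samples for one width. */
static double max_error(const double *data, size_t n, int w, int i)
{
    double worst = 0.0;
    for (size_t k = 0; k < n; k++) {
        double e = data[k] - quantize_signed(data[k], w, i);
        if (e < 0.0) e = -e;
        if (e > worst) worst = e;
    }
    return worst;
}

/* Smallest width in [w_min, w_max] whose error stays within tol,
   or -1 if no width in the range qualifies. */
int smallest_width(const double *data, size_t n, int i,
                   int w_min, int w_max, double tol)
{
    for (int w = w_min; w <= w_max; w++) {
        if (max_error(data, n, w, i) <= tol) return w;
    }
    return -1;
}
```

Because I is fixed by the data analysis, each candidate W directly determines F = W - I, so sweeping W is enough to find the fractional width.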

References:

- [1] Riazati, Mohammad, Masoud Daneshtalab, Mikael Sjödin, and Björn Lisper. “DeepHLS: A complete toolchain for automatic synthesis of deep neural networks to FPGA.” In 2020 27th IEEE International Conference on Electronics, Circuits and Systems (ICECS), pp. 1-4. IEEE, 2020.

- [2] A. Gulli and S. Pal, Deep learning with Keras. Packt Publishing Ltd, 2017.

- [3] V. Dumoulin and F. Visin, “A guide to convolution arithmetic for deep learning,” arXiv preprint arXiv:1603.07285, 2016.